2021-10-11 New! -- As part of the mocap autolabeling package SOMA we release the MoSh++ code, capable of fitting SMPL-X to given mocap marker or even point cloud data.

Marker-based mocap is widely criticized as producing lifeless and unnatural motions. We argue that this is the result of "indirecting" through a skeleton that acts as a proxy for the human movement. In standard mocap, visible 3D markers on the body surface are used to infer the unobserved skeleton. This skeleton is then used to animate a 3D model, and what is rendered is the visible body surface. While typical protocols place markers on parts of the body that move as rigidly as possible, soft-tissue motion always affects surface marker motion. Because non-rigid motions of surface markers are treated as noise, subtle information about body motion is lost in going from the non-rigid body surface to the rigid, articulated skeleton representation. We argue that these non-rigid marker motions are not noise but correspond to subtle surface motions that are important for realistic animation.

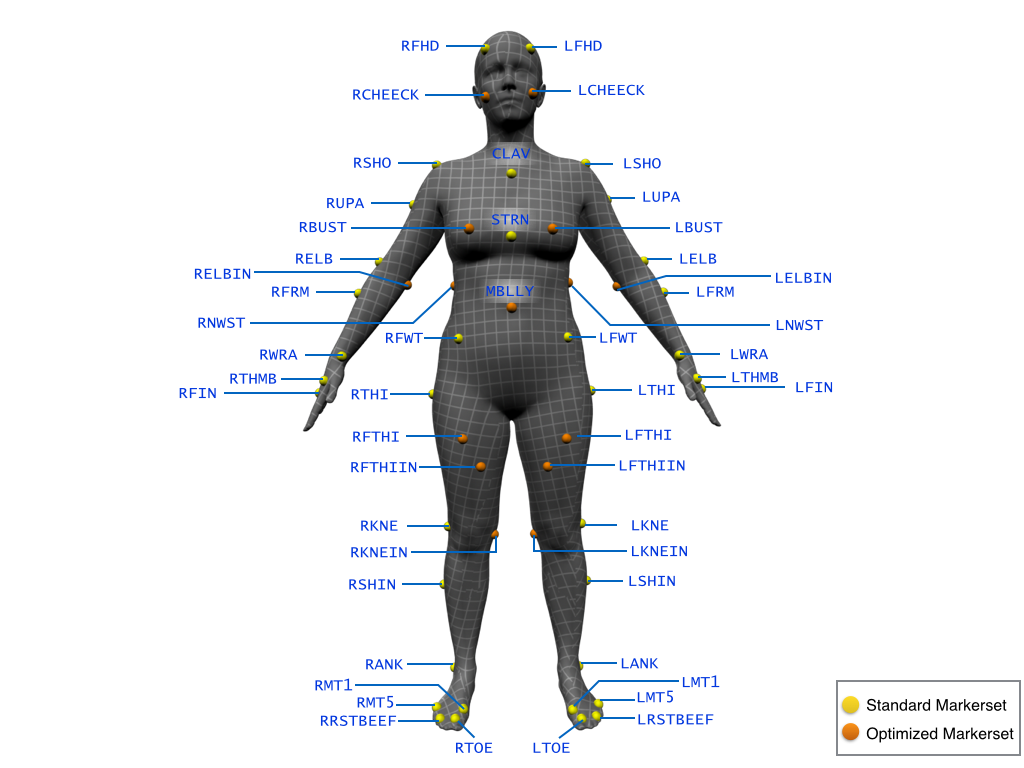

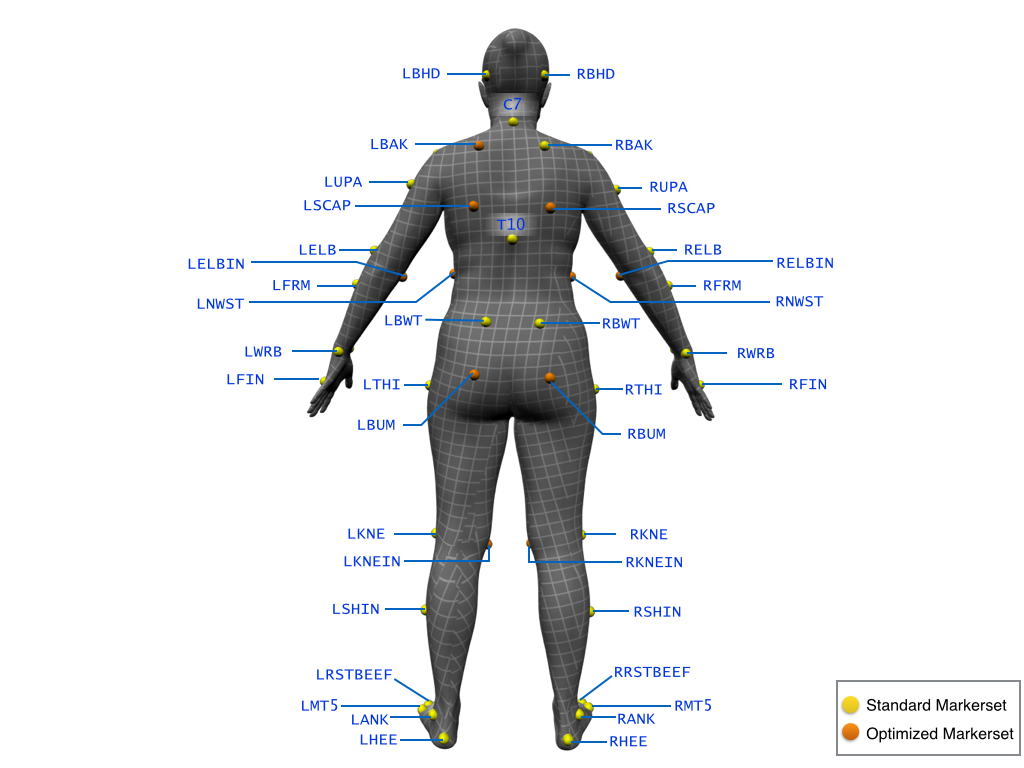

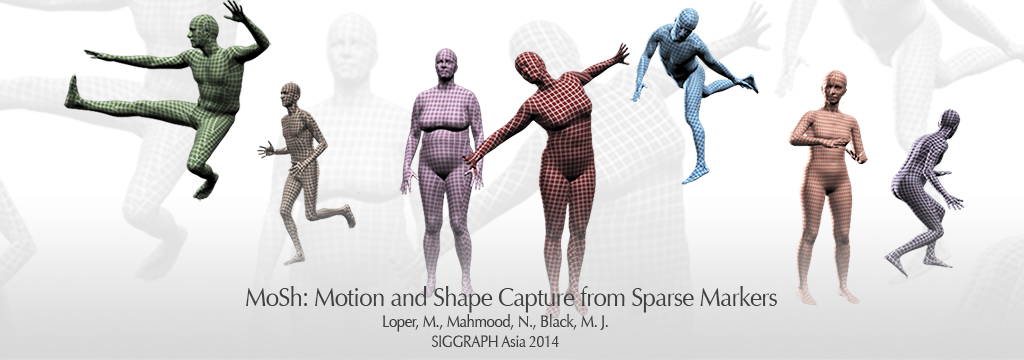

We demonstrate a new approach called MoSh (Motion and Shape capture) that automatically extracts this detail from mocap data. MoSh estimates body shape and pose together from sparse marker data by exploiting a parametric model of the human body. In contrast to previous work, MoSh solves for the marker locations relative to the body and estimates accurate body shape directly from the markers without the use of 3D scans; this effectively turns a mocap system into an approximate body scanner. MoSh captures soft-tissue motions directly from markers by allowing body shape to vary over time.
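To make the idea of estimating shape from sparse markers concrete, here is a minimal sketch under strong simplifying assumptions. It is not the MoSh objective: it assumes a toy linear shape model with random data, markers glued exactly to known vertices, and no pose at all, whereas MoSh also solves for pose and for the marker locations relative to the body. The function name `fit_shape_to_markers` and all array names are hypothetical.

```python
import numpy as np

def fit_shape_to_markers(mean_verts, shape_basis, marker_vertex_ids, observed_markers):
    """Least-squares shape coefficients for a toy linear body model.

    mean_verts:        (V, 3) template vertices
    shape_basis:       (K, V, 3) shape directions
    marker_vertex_ids: (M,) vertex index each marker is attached to (assumed known)
    observed_markers:  (M, 3) measured marker positions
    """
    num_coeffs = shape_basis.shape[0]
    # Keep only the rows of the model that correspond to marker vertices.
    A = shape_basis[:, marker_vertex_ids, :].reshape(num_coeffs, -1).T  # (3M, K)
    b = (observed_markers - mean_verts[marker_vertex_ids]).reshape(-1)  # (3M,)
    betas, *_ = np.linalg.lstsq(A, b, rcond=None)
    return betas

# Synthetic toy data: 200 vertices, 4 shape coefficients, 30 markers.
rng = np.random.default_rng(0)
V, K, M = 200, 4, 30
mean_verts = rng.normal(size=(V, 3))
shape_basis = rng.normal(size=(K, V, 3))
true_betas = np.array([0.5, -1.0, 0.25, 2.0])
true_verts = mean_verts + np.tensordot(true_betas, shape_basis, axes=1)

marker_vertex_ids = rng.choice(V, size=M, replace=False)
observed_markers = true_verts[marker_vertex_ids]

est_betas = fit_shape_to_markers(mean_verts, shape_basis, marker_vertex_ids, observed_markers)
# The full surface is reconstructed from only M marker observations.
est_verts = mean_verts + np.tensordot(est_betas, shape_basis, axes=1)
```

Because the low-dimensional model constrains all vertices at once, a few dozen marker positions are enough to determine the whole toy surface, which is the intuition behind using a mocap system as an approximate body scanner.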

We evaluate the effect of different marker sets on pose and shape accuracy and propose a new sparse marker set for capturing soft-tissue motion. We illustrate MoSh by recovering body shape, pose, and soft-tissue motion from archival mocap data and using this to produce animations with subtlety and realism. We also show soft-tissue motion retargeting to new characters and show how to magnify the 3D deformations of soft tissue to create animations with appealing exaggerations.
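One simple way to picture the exaggeration and retargeting described above is to treat soft-tissue motion as the per-frame deviation of the shape coefficients from a subject's mean shape. The sketch below works under that assumption; the functions `exaggerate_soft_tissue` and `retarget_soft_tissue` and the toy coefficient values are hypothetical and are not taken from the MoSh code.

```python
import numpy as np

def exaggerate_soft_tissue(betas_per_frame, gain):
    """Scale each frame's deviation from the sequence-mean shape by `gain`."""
    betas_per_frame = np.asarray(betas_per_frame, dtype=float)
    mean_betas = betas_per_frame.mean(axis=0, keepdims=True)
    return mean_betas + gain * (betas_per_frame - mean_betas)

def retarget_soft_tissue(betas_per_frame, target_betas):
    """Add the source's per-frame shape deviations to another character's shape."""
    betas_per_frame = np.asarray(betas_per_frame, dtype=float)
    mean_betas = betas_per_frame.mean(axis=0, keepdims=True)
    return np.asarray(target_betas, dtype=float) + (betas_per_frame - mean_betas)

# Three frames of a toy two-coefficient shape sequence.
betas = np.array([[1.0, 0.0],
                  [1.2, -0.1],
                  [0.8, 0.1]])

amplified = exaggerate_soft_tissue(betas, gain=2.0)
retargeted = retarget_soft_tissue(betas, target_betas=[-0.5, 3.0])
```

With `gain=1.0` the sequence is returned unchanged, and any gain leaves the mean shape untouched, so only the time-varying component is magnified.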

Referencing the Dataset in your work

Here are the BibTeX snippets for citing the Dataset in your work.

Main paper and benchmark:

@article{Loper:SIGASIA:2014,
  title = {{MoSh}: Motion and Shape Capture from Sparse Markers},
  author = {Loper, Matthew M. and Mahmood, Naureen and Black, Michael J.},
  address = {New York, NY, USA},
  publisher = {ACM},
  month = nov,
  number = {6},
  volume = {33},
  pages = {220:1--220:13},
  abstract = {Marker-based motion capture (mocap) is widely criticized as producing lifeless animations. We argue that important information about body surface motion is present in standard marker sets but is lost in extracting a skeleton. We demonstrate a new approach called MoSh (Motion and Shape capture), that automatically extracts this detail from mocap data. MoSh estimates body shape and pose together using sparse marker data by exploiting a parametric model of the human body. In contrast to previous work, MoSh solves for the marker locations relative to the body and estimates accurate body shape directly from the markers without the use of 3D scans; this effectively turns a mocap system into an approximate body scanner. MoSh is able to capture soft tissue motions directly from markers by allowing body shape to vary over time. We evaluate the effect of different marker sets on pose and shape accuracy and propose a new sparse marker set for capturing soft-tissue motion. We illustrate MoSh by recovering body shape, pose, and soft-tissue motion from archival mocap data and using this to produce animations with subtlety and realism. We also show soft-tissue motion retargeting to new characters and show how to magnify the 3D deformations of soft tissue to create animations with appealing exaggerations.},
  journal = {ACM Transactions on Graphics, (Proc. SIGGRAPH Asia)},
  url = {http://doi.acm.org/10.1145/2661229.2661273},
  year = {2014},
  doi = {10.1145/2661229.2661273}
}